Pretty much anything Google’s most popular engineer Matt Cutts says makes headlines in the SEO community, but often his Webmaster Chat videos and advice aren’t mind-blowing by any stretch of the imagination. For instance, we recently covered a video where Cutts explained that bad grammar in the comment section most likely won’t hurt your ranking (unless you allow spam to run rampant).

For content creators, it was a legitimate concern that poorly written comments might negate the hard work put into writing legible, well-constructed content. However, many outlets used this to run headlines claiming that Google doesn’t care about grammar, which is not even close to being confirmed.

As Search Engine Land points out, way back in 2011 Cutts publicly stated that there is a correlation between spelling and PageRank, but that Google does not use grammar as a “direct signal.” However, in his latest statement on the issue, Cutts specifies that you don’t need to worry about the grammar in your comments “as long as the grammar on your own page is fine.” This suggests Google does in fact care about the quality of the writing you publish.

It is unclear exactly where Google draws the line at the moment, as the company implies that grammar within your content does matter but has never stated that it is a ranking signal. Chances are a typo or two won’t hurt you, but Google may well penalize pages with rampant errors and legibility issues.

On the other hand, Bing has recently made it pretty clear that they do care about technical quality in content as part of their ranking factors. Duane Forrester shared a blog post on the Bing Webmaster Blog which states, “just as you’re judging others’ writing, so the engines judge yours.”

Duane continues, “if you [as a human] struggle to get past typos, why would an engine show a page of content with errors higher in the rankings when other pages of error free content exist to serve the searcher?”

In the end, it all comes down to search engines trying to provide the best quality content they can. The search engines don’t want to direct users to content that will be hard to make sense of, and technical errors can severely undermine a well-thought-out argument.

As always, the best way to approach the issue is to simply write content for your readers. If your content can communicate clearly to your audience, the search engines shouldn’t have any problems with it. But, if a real person has trouble understanding you, the search engines aren’t going to do you any favors.

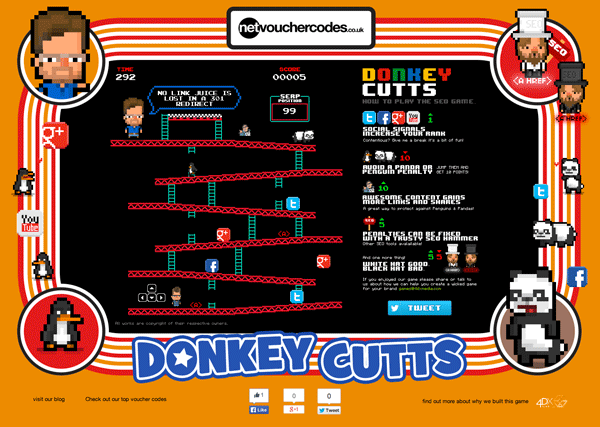

Usually Matt Cutts, esteemed Google engineer and head of Webspam, uses his regular videos to answer questions which can have a huge impact on a site’s visibility. He recently answered questions about using the Link Disavow Tool if you haven’t received a manual action, and he often delves into linking practices which Google views as spammy. But, earlier this week he took to YouTube to answer a simple question and give a small but unique tip webmasters might keep in mind in the future.

There has been quite a bit of speculation ever since Matt Cutts publicly stated that Google wouldn’t be updating the PageRank meter in the Google Toolbar before the end of the year. PageRank has been assumed dead for a while, yet Google refuses to issue the death certificate, assuring us it currently has no plans to scrap the tool outright.