The Washington Post may not be the first organization you imagine when you think about SEO experts, but as a popular news organization read by millions around the world, The Post has dealt with its fair share of issues in developing its long-term strategies for web performance and SEO.

Now, the news site is sharing the fruit of that hard work by releasing its own Web Performance and SEO Best Practices and Guidelines.

These guidelines help ensure that The Washington Post stays visible in highly competitive search spaces, drives more organic traffic, and maintains a positive user experience on its website.

In the announcement, engineering lead Arturo Silva said:

“We identified a need for a Web Performance and SEO engineering team to build technical solutions that support the discovery of our journalism, as the majority of news consumers today read the news digitally. Without proper SEO and web performance, our stories aren’t as accessible to our readers. As leaders in engineering and media publishing, we’re creating guidelines that serve our audiences and by sharing those technical solutions in our open-source design system, we are providing tools for others to certify that their own site practices are optimal.”

What’s In The Washington Post’s SEO and Web Performance Guidelines?

If you’re hoping to see a surprise trick or secret tool being used by The Washington Post, you are likely to be disappointed.

The guidelines are largely in line with the practices most SEO experts follow, although they focus on the particular search and web performance issues The Post faces.

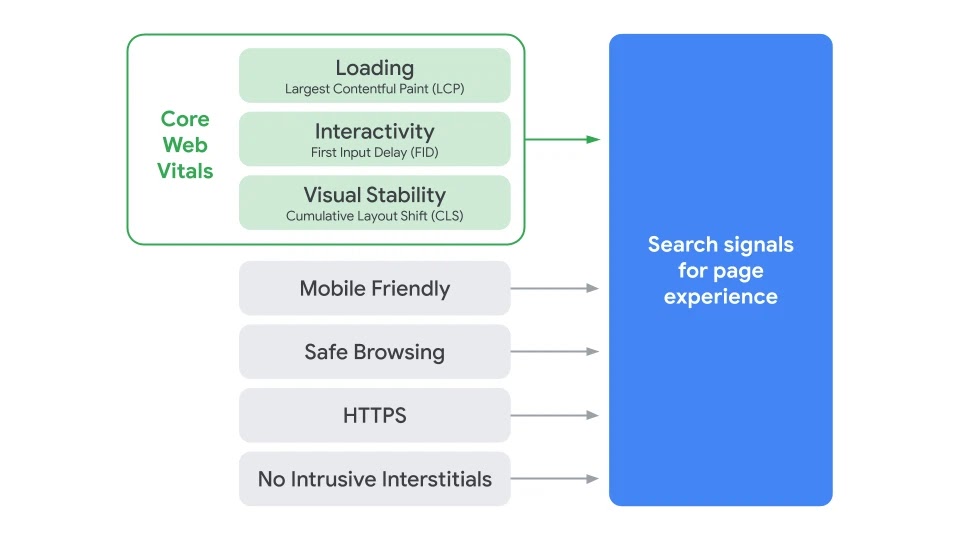

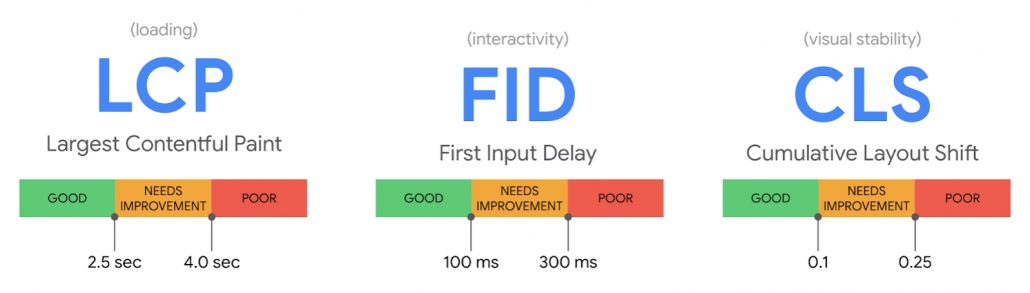

For example, the Web Performance section covers three specific areas: loading performance, rendering performance, and responsiveness. Similarly, the SEO guidelines are split into on-page SEO, content optimization, technical SEO, and off-page SEO.

More than anything, the guidelines highlight the need for brands to tailor their SEO efforts to their own needs and goals. Brands should build strategies that are likely to stay useful for the foreseeable future rather than chase every new SEO trend.

To read the guidelines for yourself, visit The Washington Post's site.