If you’ve spent much time trying to promote your business on Facebook, you’ve probably noticed that the social platform isn’t exactly the best at transparency.

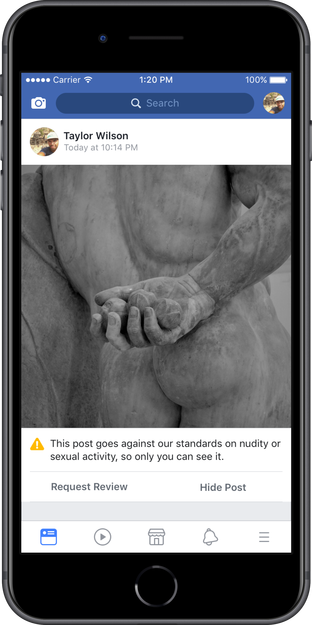

There have long been questions about what exactly you can and can’t post, which made it all the more frustrating that there was no way to appeal if Facebook removed your content for violating its hidden guidelines.

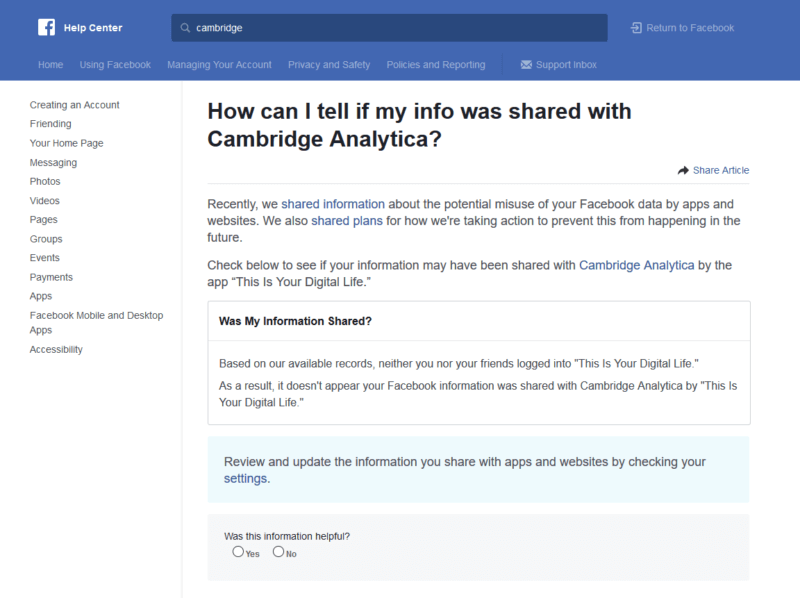

That is beginning to change, however. Likely spurred by months of criticism and controversy over its lack of transparency and its reckless handling of users’ data, Facebook has been making several big changes to increase transparency and regain people’s trust.

The latest move in this direction is the release of Facebook’s full Community Standards guidelines, made available to the public for the first time in the company’s history.

These guidelines have been used internally for years to screen comments, messages, and images posted by users for inappropriate content. A portion of the Community Standards was also leaked last year and published by The Guardian.

The 27-page set of guidelines covers a wide range of topics, including bullying, violent threats, self-harm, nudity, and many others.

“These are issues in the real world,” Monika Bickert, head of global policy management at Facebook, told a room full of reporters. “The community we have using Facebook and other large social media mirrors the community we have in the real world. So we’re realistic about that. The vast majority of people who come to Facebook come for very good reasons. But we know there will always be people who will try to post abusive content or engage in abusive behavior. This is our way of saying these things are not tolerated. Report them to us, and we’ll remove them.”

The guidelines apply in every country where Facebook is currently available, which is why they are published in more than 40 languages.

The rules also apply to Facebook’s sister services, such as Instagram, though there are some differences across platforms. For example, Instagram does not require users to share their real names.

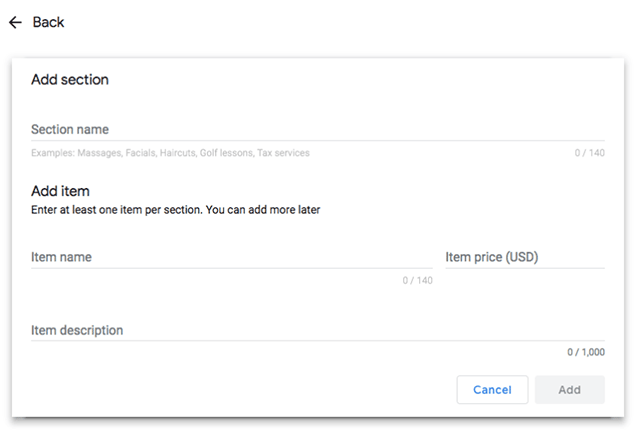

In addition to this release, Facebook is introducing plans for an appeals process for content that was taken down incorrectly. This will allow the company to reconsider posts that may be appropriate given the context surrounding them.

If your content is removed, Facebook will now notify you directly through your account. From there, you can request a review, which will be conducted within 24 hours. If Facebook decides the takedown was a mistake, it will restore the post and notify you of the change.