Google is rolling out a new addition to its “About this result” feature in search results that explains why the search engine chose to rank a specific result.

The new section, called “Your search & this result,” explains the specific factors that led Google to believe a page may have what you’re looking for.

These can include a number of SEO factors, such as the keywords that matched the page (including related but not directly matching terms), backlink details, related images, and location-based information.

How Businesses Can Use This Information

For users, this feature can help explain why they are seeing specific search results and even provide tips for refining a search to get better results.

The unspoken utility of this tool for businesses is glaringly obvious, however.

This feature essentially provides an SEO report card, showing exactly where you are doing well on ranking for important keywords. By noting what is not included, you can also get an idea of what areas could be improved to help you rank better in the future.

Taking this even further, you could explore the details for other pages ranking for your primary keywords, helping you better strategize to overtake your competition.

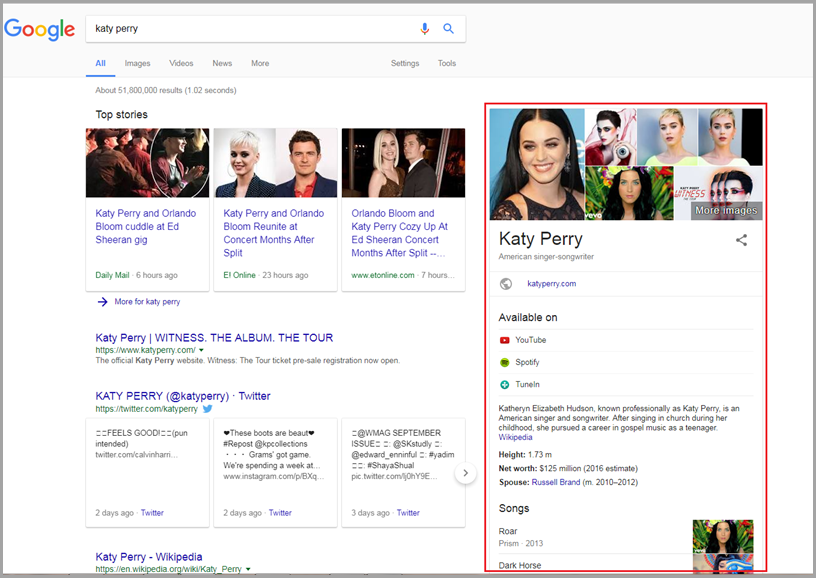

What It Looks Like

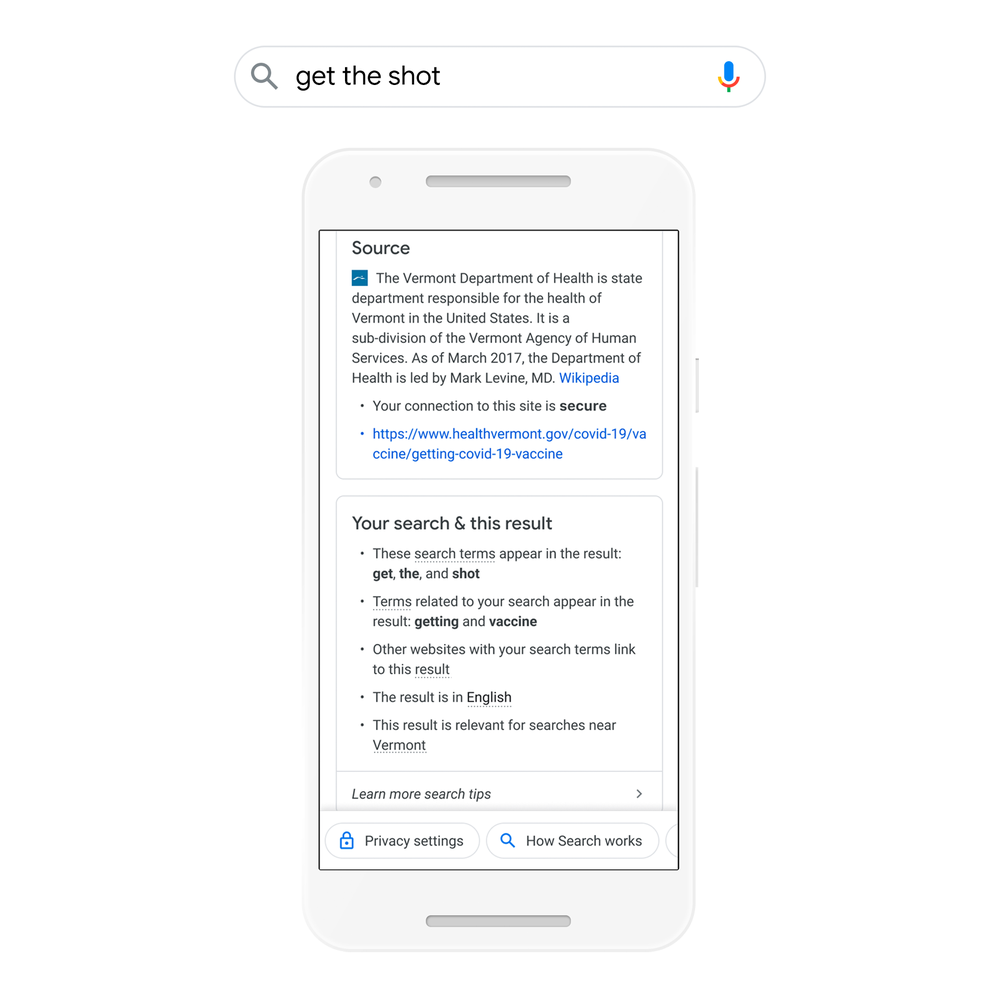

Below, you can see a screenshot of what the feature looks like in action:

The information box provides a quick bullet point list of several factors which caused the search engine to return the specific result.

While Google has described only a few of the details the box may include, users around the web have reported seeing information about all of the following factors:

- Included search terms: Google can show which exact search terms were matched with the content or HTML on the related page. This includes content that is not typically visible to users, such as the title tag or metadata.

- Related search terms: Along with the keywords which were directly matched with the related page, Google can also show “related” terms. For example, Google knew to include results related to the Covid vaccine based on the keyword “shot”.

- Other websites link to this page: The search engine may choose to highlight a page that might otherwise appear unrelated because several pages using the searched keyword link to it.

- Related images: If the images are properly optimized, Google may be able to identify when images on a page are related to your search.

- This result is [Language]: Obviously, users who don’t speak or read your language are unlikely to have much use for your website or content. This essentially notes that the page is in the same language you use across the rest of Google.

- This result is relevant for searches in [Region]: Lastly, the search engine may note if locality helped influence its search result based on other contextual details. For example, it understood that a user in Vermont was likely looking for nearby results when searching “get the shot”.
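For site owners who want a rough preview of the “Included search terms” factor on their own pages, the check can be approximated locally. The following is a minimal sketch, not a description of how Google matches terms: it assumes simple case-insensitive substring matching, and the names `PageTextParser` and `find_term_matches`, as well as the sample HTML, are hypothetical and invented for illustration. It uses only Python's standard-library `html.parser` module.

```python
from html.parser import HTMLParser


class PageTextParser(HTMLParser):
    """Collects the <title>, meta description, and visible body text of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = ""
        self.body_text = []
        self._in_title = False
        self._skip_depth = 0  # greater than 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif self._skip_depth == 0:
            self.body_text.append(data)


def find_term_matches(html, search_terms):
    """Return, for each term, the page areas where it appears.

    Assumption: plain case-insensitive substring matching, so "clinic"
    also matches "clinics". Real search engines are far more sophisticated.
    """
    parser = PageTextParser()
    parser.feed(html)
    areas = {
        "title": parser.title.lower(),
        "meta_description": parser.meta_description.lower(),
        "body": " ".join(parser.body_text).lower(),
    }
    return {
        term: [name for name, text in areas.items() if term.lower() in text]
        for term in search_terms
    }


# Hypothetical sample page, invented for this example.
sample_html = """
<html><head>
<title>Get the Shot: Vermont Vaccine Clinics</title>
<meta name="description" content="Find a vaccine clinic near you.">
</head><body><p>Walk-in clinics are open daily.</p></body></html>
"""

matches = find_term_matches(sample_html, ["shot", "vaccine", "clinic", "pharmacy"])
for term, areas in matches.items():
    print(term, "->", areas or "not found")
```

A term that turns up only in the title or meta description is one that visitors may never see in the page copy, while a term that is not found anywhere marks a gap worth reviewing.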

The expanded “About this result” section is already rolling out to English-language users in the U.S. and is expected to be widely available across the country within a week. From there, Google says it will work to bring the feature to more countries and languages soon.