Yesterday we reported on the mass hijacking of thousands of Google+ Local listings. In brief, over a short period of time, a huge number of hotels with business listings for Google Maps and Search had the links in those listings hijacked. The story was broken open by Danny Sullivan from Search Engine Land, who attempted to track down the source of the spam attack but found no concrete evidence to suggest who the culprit actually is.

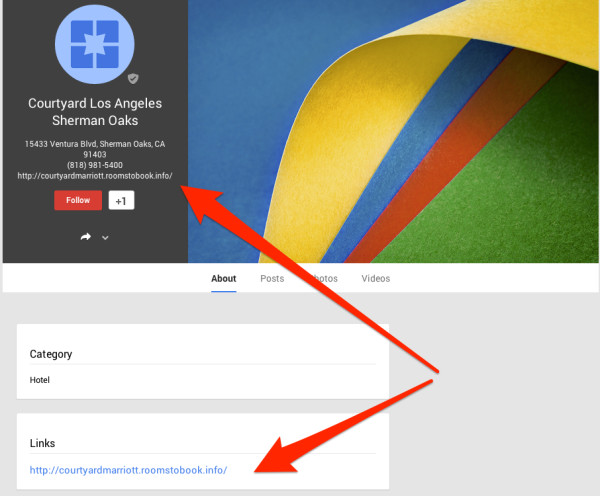

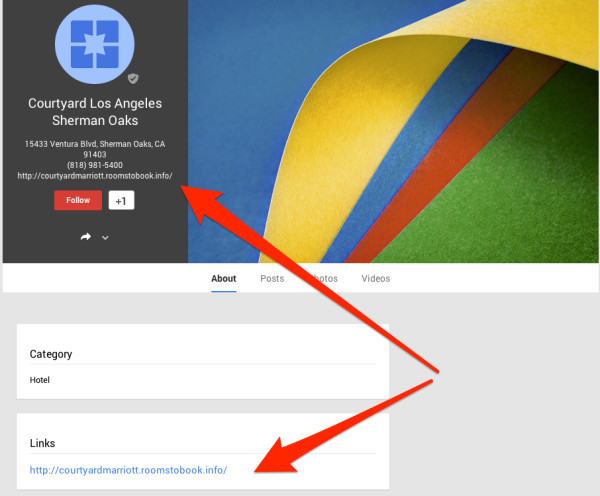

While the issue could have a big effect on many businesses in the hotel sector, it is more notable for showing that other attacks could happen in the future. Even worse, no one outside of Google has been able to explain how this could occur, especially given the number of big hotel chains affected. The hotels hit with the spam weren’t mom-and-pop bed and breakfast places. Most of the listings were for huge hotel chains, such as the Marriott hotel shown in the example of a hijacked link below.

If Google does know how this was able to happen, they aren’t telling. In fact, Google has been terribly quiet on the issue. They’ve yet to issue an official public statement, aside from telling Sullivan that he could confirm they were aware of the problem and working to resolve it.

The only direct word from Google on the hijackings is a simple response in an obscure Google Business Help thread from Google’s Community Manager, Jade Wang. If it weren’t for Barry Schwartz’s watchful eye, it is possible the statement would never have been widely seen. Wang said:

“We’ve identified a spam issue in Places for Business that is impacting a limited number of business listings in the hotel vertical. The issue is limited to changing the URLs for the business. The team is working to fix the problem as soon as possible and prevent it from happening again. We apologize for any inconvenience this may have caused.”

These changes won’t take place until November, but don’t expect a prompt roll-out. It is possible you may start seeing the changes starting the 11th, but more likely it will gradually appear over the span of a few days or even a couple of weeks.