Google has been bringing down the hammer on spammy websites quite a bit recently, with more specific penalties for sites that aren’t following its guidelines. There have been several high-profile cases, such as the Rap Genius penalty, and several attacks on entire spammy industries. But if you are responsible for multiple sites with spammy habits, a single manual action can hurt more than just one of them.

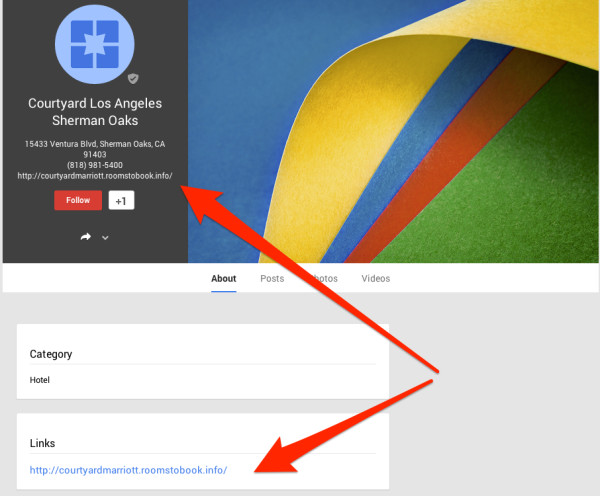

It has been suggested that Google may look at your other sites when it issues a manual action, and Matt Cutts has all but confirmed that this happens at least some of the time.

Marie Haynes reached out to Cutts for help dealing with a spammy client, and his responses make it clear that the client appears to be linked to “several” spammy sites. Over the course of three tweets, Cutts makes it obvious that he has checked out many of the spammer’s sites, not just the one that received a manual action, and he even reveals one way Google can tell the sites are associated.

@Marie_Haynes e.g. notice that http://t.co/7wcwQVKaH1 has the same address and the same company registration number.

— Matt Cutts (@mattcutts) March 7, 2014

@Marie_Haynes and make sure to press your client about exactly how many "quick case" sites they own, because it appears to be several.

— Matt Cutts (@mattcutts) March 7, 2014

@Marie_Haynes so I worry that you haven't truly gotten through to your client, who shows signs of long-standing, mass, deliberate spam 🙁

— Matt Cutts (@mattcutts) March 7, 2014

Of course, Google probably doesn’t review every site a penalized webmaster operates, but this shows it definitely does when the situation calls for it. If your spammy efforts are caught on one site, chances are you are making the same mistakes on almost every site you operate, and they are all susceptible to being penalized. In the case of this client, it seems playing against the rules has created a pretty serious web of trouble.

Google isn’t the only search engine waging a war on black hat or manipulative SEO. Every major search engine has been adapting their services to fight against those trying to cheat their way to the top of the rankings. This week, Bing made their latest move against devious optimizers by amending their

Have you noticed a difference using Google on your smartphone this past week? Last week Ilya Grigorik, a Google developer advocate,
