We all know that the search results you get on mobile and the ones you get on desktop devices can be very different – even for the same query, made at the same time, in the same place, logged into the same Google account.

Have you ever found yourself asking exactly why this happens?

One site owner did, and recently got the chance to ask one of Google’s Senior Webmaster Trends Analysts, John Mueller.

In the recent SEO Office Hours session, Mueller explained that a wide range of factors decides which results are returned for a search query, including the device you are using, and why the device matters.

Why Are Mobile Search Rankings Different From Desktop?

The question put to Mueller sought to clarify why there is still a disparity between mobile and desktop search results after the launch of mobile-first indexing for all sites. Here’s what was asked:

“How are desktop and mobile ranking different when we’ve already switched to mobile-first indexing.”

Indexing and Ranking Are Different

In response to the question, Mueller first clarified that indexing and ranking are not the same thing. Instead, they are two separate parts of a larger system.

“So, mobile-first indexing is specifically about that technical aspect of indexing the content. And we use a mobile Googlebot to index the content. But once the content is indexed, the ranking side is still (kind of) completely separate.”

Although the mobile-first index was a significant shift in how Google brought sites into its search engine and understood them, it actually had little direct effect on most search results.

Mobile Users and Desktop Users Have Different Needs

Beyond the explanation about indexing vs. ranking, John Mueller also said that Google returns different rankings for mobile and desktop searches because users on each device may have different needs in the moment.

“It’s normal that desktop and mobile rankings are different. Sometimes that’s with regards to things like speed. Sometimes that’s with regards to things like mobile-friendliness.

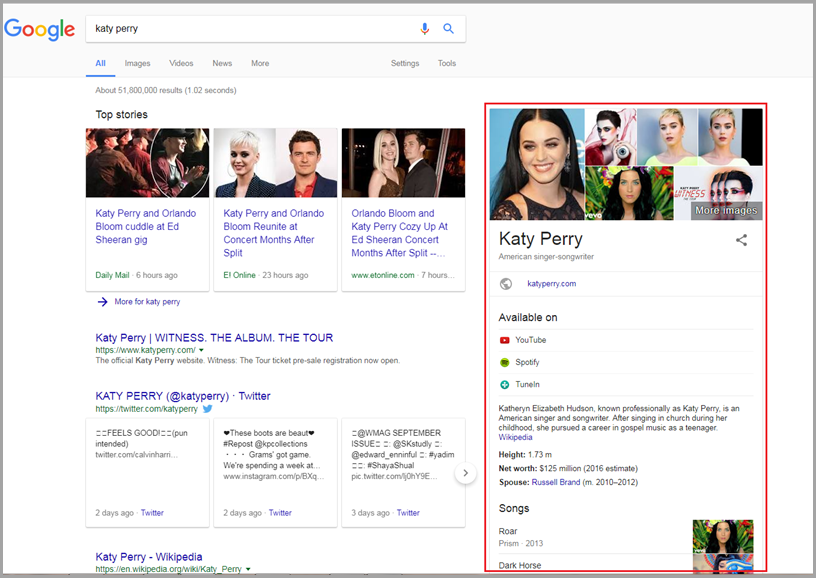

“Sometimes that’s also with regards to the different elements that are shown in the search results page.

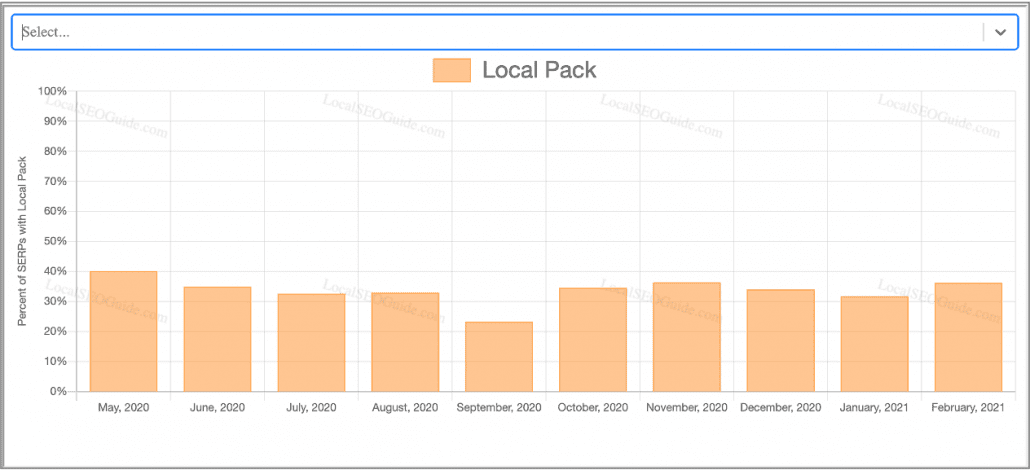

“For example, if you’re searching on your phone then maybe you want more local information because you’re on the go. Whereas if you’re searching on a desktop maybe you want more images or more videos shown in the search results. So we tend to show …a different mix of different search results types.

“And because of that it can happen that the ranking or the visibility of individual pages differs between mobile and desktop. And that’s essentially normal. That’s a part of how we do ranking.

“It’s not something where I would say it would be tied to the technical aspect of indexing the content.”

With this in mind, there’s little need to be concerned if you aren’t showing up in the same spot for the same exact searches on different devices.

Instead, watch for big shifts in what devices people are using to access your page. If your users are overwhelmingly using phones, assess how your site can better serve the needs of desktop users. Likewise, a majority of traffic coming from desktop devices may indicate you need to assess your site’s speed and mobile friendliness.
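If you can export your analytics sessions with a device label attached, one rough way to watch for those shifts is to compare each device's share of traffic between two periods. The sketch below is only an illustration: the function names, the 10-point threshold, and the sample data are all assumptions, not anything Mueller or Google recommends.

```python
from collections import Counter


def device_share(sessions):
    """Return each device's fraction of all sessions (empty dict if no data)."""
    counts = Counter(sessions)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {device: n / total for device, n in counts.items()}


def share_shifts(previous, current, threshold=0.10):
    """Return devices whose traffic share changed by at least `threshold`.

    `threshold` is an arbitrary, hypothetical cutoff (0.10 = 10 percentage points).
    """
    before = device_share(previous)
    after = device_share(current)
    shifts = {}
    for device in set(before) | set(after):
        change = after.get(device, 0.0) - before.get(device, 0.0)
        if abs(change) >= threshold:
            shifts[device] = round(change, 2)
    return shifts


# Made-up sample data: one device label per session.
last_month = ["mobile"] * 60 + ["desktop"] * 40
this_month = ["mobile"] * 80 + ["desktop"] * 20

print(share_shifts(last_month, this_month))
```

A positive number means that device gained share and a negative number means it lost share. What counts as a "big" shift depends on your site, so treat the threshold as a starting point to tune.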

If you want to hear Mueller’s full explanation and even more discussion about search engine optimization, check out the SEO Office Hours video below: