Google has been very clear about its stance on manipulative or deceptive behavior on websites. While it can’t tackle every shady practice sites have adopted, it has set its sights on a few manipulative tactics it plans to take down.

The first warning came when Google directly stated its intention to penalize sites that direct mobile users to unrelated mobile landing pages rather than the content they clicked to access. While that frustrating practice isn’t exactly manipulative, it is an example of sites redirecting users without their consent, and it can be very hard to escape: clicking back often just leads to the mobile redirect page, which places you right back at the page you didn’t ask for in the first place.

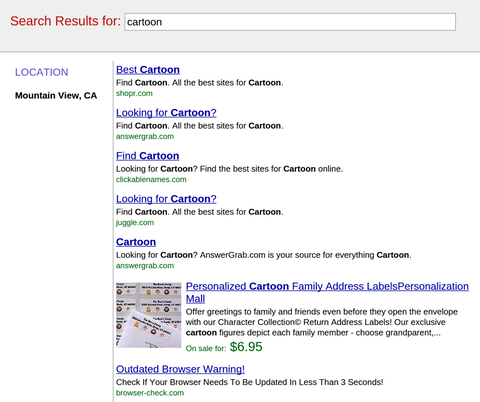

Now, Google is taking aim at a similar tactic in which site owners insert fake pages into the browser history, so that when users attempt to exit, they are directed to a fake search results page filled entirely with ads or deceptive links, like the one pictured here. It is essentially a twist on the redirect tactic, which keeps placing users who try to exit back on the page they clicked through to. The only way out is a flurry of back clicks, which ends up putting you much further back in your history than you intended. You may not have seen it yet, but it has been popping up more and more lately.

The quick upswing is probably what drew Google’s attention to the tactic. As Search Engine Watch explains, deceptive behavior on sites has pretty much always been against Google’s guidelines, so a special warning aimed at sites adopting this practice suggests it is spreading widely among sites that are comfortable pushing Google’s limits.