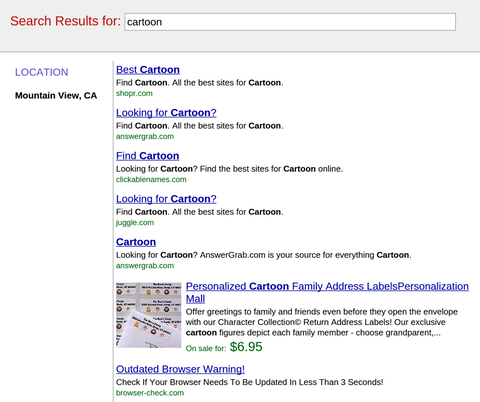

Duplicate content has always been viewed as a serious no-no by webmasters and search engines alike. It is generally associated with spam or low-quality content, so Google usually penalizes sites with too much of it. But what does that mean for necessary duplicate content like privacy policies, terms and conditions, and the other kinds of legally required text that many websites must carry?

This has been an understandable source of confusion for many webmasters. Those in the legal and financial sectors in particular worry that the sheer number of disclaimers on their sites could hurt their rankings.

Well, of course, Matt Cutts is here to sweep away your concerns. He addressed the issue in his recent Webmaster Chat video and clarified that unless you're actively doing something spammy within these sections of legalese, such as keyword stuffing, you shouldn't worry about it.

He said, “We do understand that a lot of different places across the web require various disclaimers, legal information, and terms and conditions, that sort of stuff, so it’s the sort of thing where if we were not to rank that stuff well, that would hurt our overall search quality. So, I wouldn’t stress out about that.”