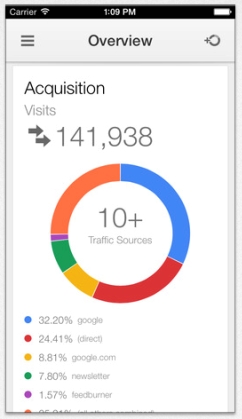

A few weeks ago, Google announced they would begin favoring sites that switch to HTTPS in search results. At the time of the announcement, most of the SEO community was skeptical at best, and few believed the HTTPS ranking factor would have any effect on rankings whatsoever. Well, it has been a couple of weeks and we have the verdict.

The skeptics were absolutely right.

SearchMetrics decided to evaluate whether HTTPS had any discernible effect on search results. According to Marcus Tober of SearchMetrics, there is no data showing that HTTPS has had any effect on Google rankings since the launch of the ranking factor.

> In a nutshell: No relationships have been discernible to date from the data analyzed by us between HTTPS and rankings nor are there any differences between HTTP and HTTPS. In my opinion therefore, Google has not yet rolled out this ranking factor – and/or this factor only affects such a small section of the index to date that it was not possible to identify it with our data.

Tober shared his data along with his report, and it matches the available anecdotal evidence as well. Site owners across the web rushed to update their sites to the newly favored HTTPS, but I could not find a single story suggesting it had any ranking influence at all.

At the time of the announcement, Google did suggest that switching over could possibly influence rankings, but they also called it a “very lightweight signal,” so there’s no need to grab your pitchforks. Still, these results may hold some lessons for those who were expecting an easy and quick rankings boost with minimal work.