Google is emailing webmasters whose sites are being migrated to the search engine’s new mobile-first index. Once your site is migrated, Google will use the mobile version of your site by default for indexing and ranking, which suggests Google considers your site fast enough and well enough optimized for mobile users.

The search engine previously said it would notify websites being migrated to the mobile-first index, but the emails have only started appearing in the wild over the past few days.

The notifications are coming a bit late, considering Google has confirmed that it began moving websites over to the mobile-first index months ago.

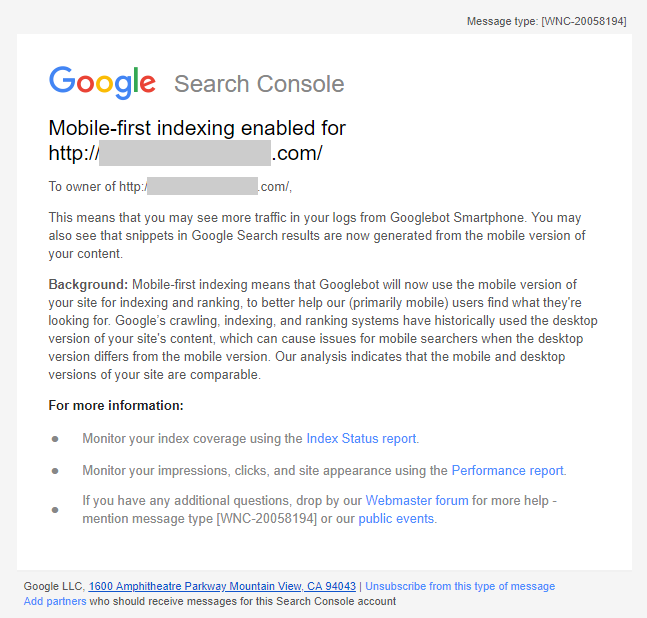

You can see a copy of the email as shared by The SEM Post or read the full text below:

“Mobile-first indexing enabled for <URL>

To owner of <URL>

This means that you may see more traffic in your logs from Googlebot Smartphone. You may also see that snippets in Google Search results are now generated from the mobile version of your content.

Background: Mobile-first indexing means that Googlebot will now use the mobile version of your site for indexing and ranking, to better help our (primarily mobile) users find what they’re looking for. Google’s crawling, indexing, and ranking systems have historically used the desktop version of your site’s content, which can cause issues for mobile searchers when the desktop version differs from the mobile version. Our analysis indicates that the mobile and desktop versions of your site are comparable.”
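If you want to check the email’s note about seeing more Googlebot Smartphone traffic in your logs, a short script can give a rough count. The sketch below is only an illustration: it assumes your server writes access logs in the common "combined" format (user agent as the last quoted field), and it assumes the smartphone crawler can be told apart by the word "Mobile" appearing alongside "Googlebot/" in the user agent. The function names are hypothetical, and user agents can be spoofed, so treat the numbers as an estimate.

```python
from collections import Counter


def classify_googlebot(user_agent: str) -> str:
    """Return 'smartphone', 'desktop', or 'other' for a user-agent string."""
    # "Googlebot/" (with the slash) skips related crawlers such as Googlebot-Image.
    if "Googlebot/" not in user_agent:
        return "other"
    # Assumption: the smartphone crawler's user agent contains "Mobile".
    return "smartphone" if "Mobile" in user_agent else "desktop"


def count_googlebot_hits(log_lines):
    """Count hits per crawler type from combined-format access log lines."""
    counts = Counter()
    for line in log_lines:
        # In the combined log format, the user agent is the last quoted field.
        parts = line.rstrip().rsplit('"', 2)
        if len(parts) < 3:
            continue  # Line has no quoted user-agent field; skip it.
        counts[classify_googlebot(parts[1])] += 1
    return counts
```

To run it against a real file, pass an open file handle, for example `count_googlebot_hits(open("access.log"))`, where `access.log` stands in for whatever path your server uses. Comparing the smartphone and desktop counts before and after the notification should show whether the shift described in the email has reached your site.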