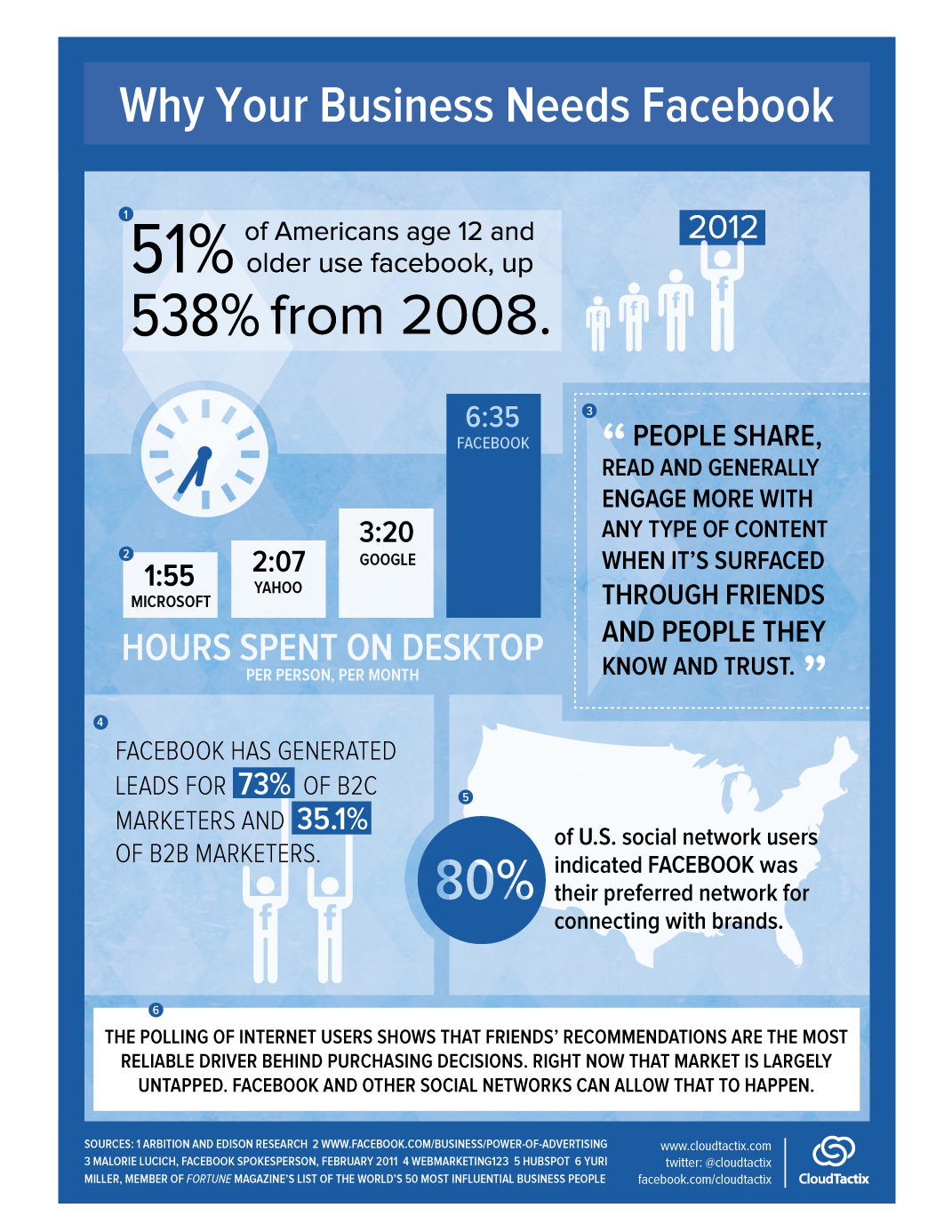

If you’ve spent much time online in the past year or two, you have almost certainly come across an infographic. They are popular with the public as well as educational, which is why more companies and content creators than ever before are using infographics to communicate and share knowledge. Some may say it is just a trend, but either way the data shows that searches for infographics rose over 800 percent in just two years, from 2010 to 2012.

Even if you don’t know what an infographic is, chances are you have seen one in your Facebook feed, a news article, or maybe even your email. Infographics are images intended to share information, data, or knowledge in a quick and easily comprehensible way. They turn boring information into interesting visuals, which not only make the information easier to understand but also make the average viewer more interested in what is being communicated.

According to Albert Costill, multiple studies have found that 90 percent of the information we retain and remember is based on visual impact. Considering how much information we take in on a day-to-day basis, that means your content should be visually impressive if you want viewers to have any hope of remembering it. If you’re still unsure about infographics, there are several reasons you should consider including them at least occasionally in your content strategy.

- Infographics are naturally more eye-catching than printed words, and a well laid-out infographic will catch viewers’ attention in ways standard text can’t. You’re free to use more images, colors, and even movement, all of which are more immediately visually appealing.

- The average online reader tends to scan text rather than read every single word. Infographics combat this tendency by making viewers more likely to engage with all of the information on the screen, and they also make it easier for those who still scan to find the information most important to them.

- Infographics are more easily shareable than most other types of content. Most social networks are image-friendly, so users have two very simple ways to show their friends their favorite infographics: they can share a link to your site, or they can save the image and share it directly. The more easily content can be shared, the more likely it is to go viral.

- Infographics can subliminally help reinforce your brand image, so long as you are consistent. Consistent colors, shapes, and messages, combined with your logo, all work to raise your brand awareness. You can see how well this works when you notice that every infographic relating to Facebook naturally uses “Facebook Blue” and reflects the style of their brand.

Obviously you shouldn’t be putting out an infographic every day. Blog posts still have their place in any content strategy. Plus, if you are creating infographics daily, it is likely their quality will suffer. Treat infographics as a tool that can be reserved for special occasions or pulled out when necessary. With the right balance, you’ll find your infographics can be more powerful and popular than you ever imagined.

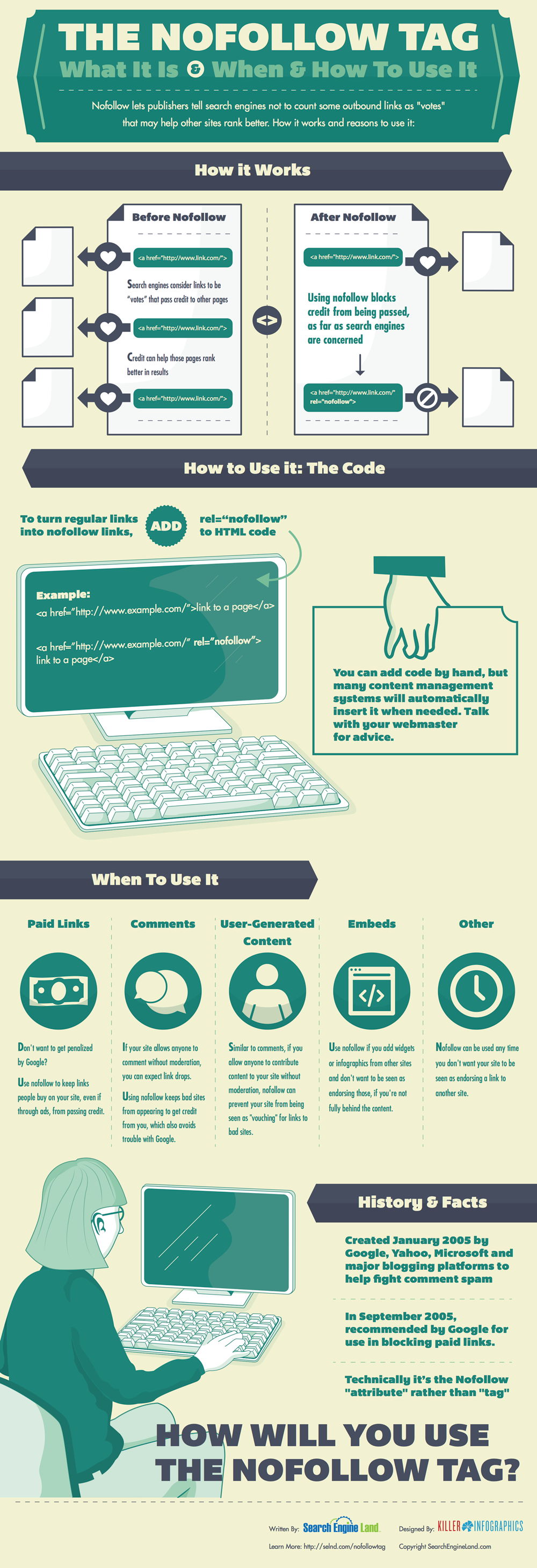

You might not have noticed, but AdWords is working a little differently since an algorithm update was quietly introduced on Tuesday. For the most part, not much has changed, but there is one notable difference: ad extensions now work as a factor in determining ad positioning.

There has been quite a bit of speculation ever since Matt Cutts publicly stated that Google wouldn’t be updating the PageRank meter in the Google Toolbar before the end of the year. PageRank has been assumed dead for a while, yet Google refuses to issue the death certificate, assuring us they currently have no plans to outright scrap the tool.

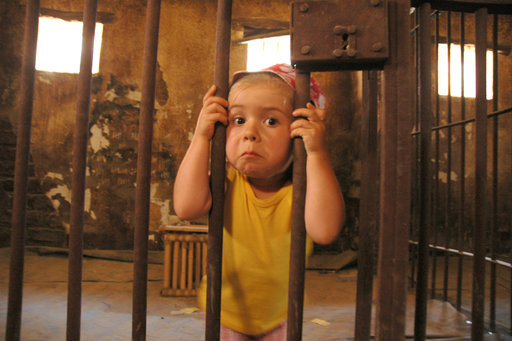

A couple of weeks ago, Google released an update directly aimed at the “industry” of websites which host mugshots, which many aptly called The Mugshot Algorithm. It was one of the more specific updates to search in recent history, and was basically meant to target sites aiming to extort money out of those who had committed a crime. Google purposefully targeted sites that were ranking well for names and displayed arrest photos, names, and details.

There have never been more opportunities for local businesses online than now. Search engines cater more and more to local markets as shoppers make more searches from smartphones to inform their purchases. But in the more competitive markets, that also means local marketing has become quite complicated.

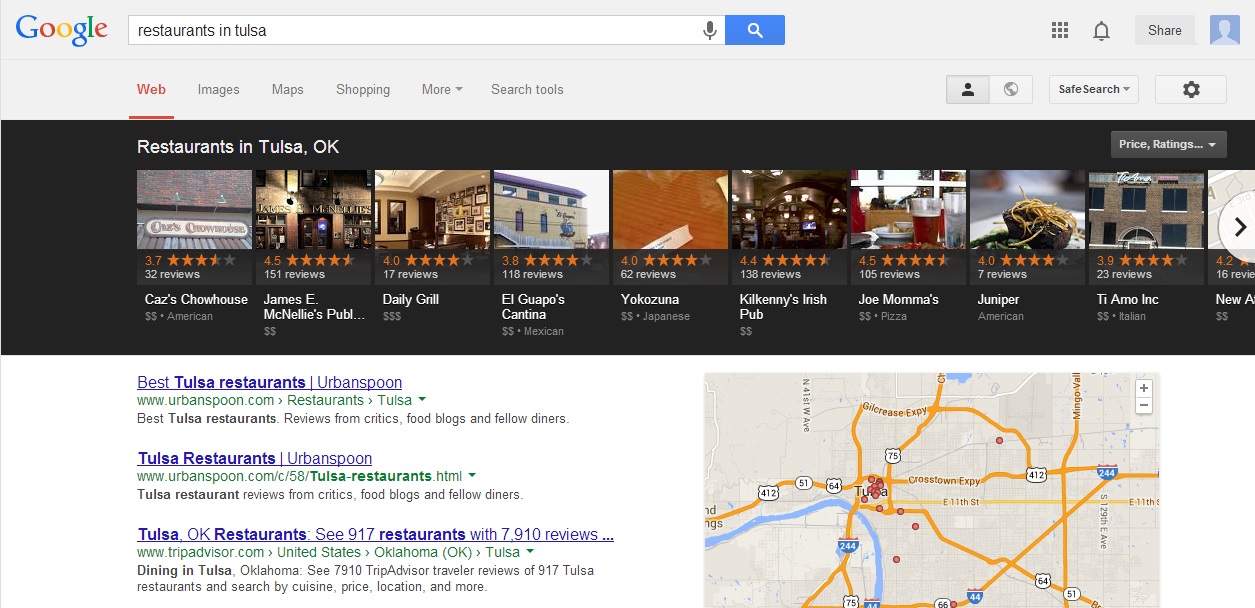

Google’s Carousel may seem new to most searchers, but it has actually been rolling out since June. That means enough time has passed for marketing and search analysts to really start digging in to see what makes the carousel tick.
