If you’ve ever received a notification from Google about a manual spam action based on “unnatural links” pointing to your webpage, Google has a new tool for you.

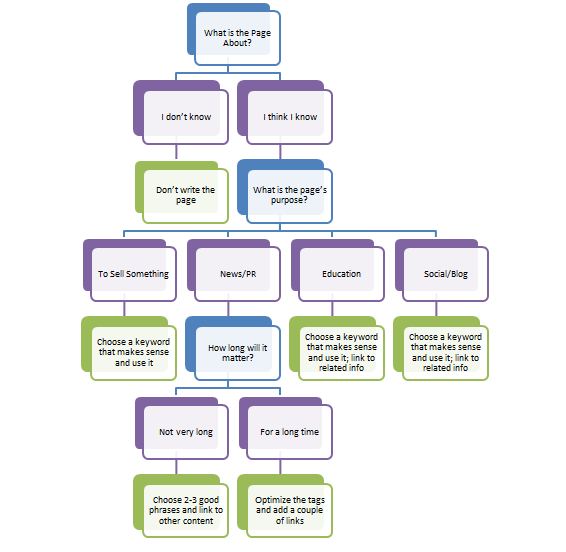

Links are one of the best-known factors Google uses to rank search results: Google examines the links between sites to decide which pages are reputable. As you probably know, this link analysis is the foundation of PageRank, one of the most famous "signals" Google uses to order results. Because spammers try to game PageRank, Google sometimes has to take manual action against sites involved.

The notification you may have received in Webmaster Tools about unnatural links suggests your site got caught up in linkspam: paid links, link exchanges, and similar tactics. The best response to the message is to get as many low-quality links pointing to your site removed as possible. This keeps Google off your back and improves the reputation of your site as a whole.

If you can't get rid of all of the links for some reason, Google's new tool can help. The Disavow Links page lets you upload a list of links you want Google to ignore when it assesses your site, and the "domain:" keyword lets you disavow links from every page on a specific domain.

Each site can have one disavow file, and that file is shared among the site's owners in Webmaster Tools.
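To make the file format concrete: a disavow file is a plain-text file with one entry per line, where a line is either a full URL to ignore or a `domain:` entry covering a whole domain, and lines starting with `#` are comments. The Python sketch below assembles such a file from a list of links and domains. The helper name `build_disavow_file` and all example URLs and domains are hypothetical, and the sketch only produces the text; uploading it still happens through the Disavow Links page.

```python
from urllib.parse import urlparse


def build_disavow_file(urls, domains, note=None):
    """Build the text of a disavow file (hypothetical helper, not a Google API).

    One entry per line; lines starting with '#' are comments, and
    'domain:' entries cover every page on that domain.
    """
    lines = []
    if note:
        # Comment lines are ignored by Google; handy for recording context.
        lines.append(f"# {note}")
    # Individual links to disavow, de-duplicated and sorted for stable output.
    for url in sorted(set(urls)):
        parsed = urlparse(url)
        if parsed.scheme not in ("http", "https") or not parsed.hostname:
            raise ValueError(f"not an absolute http(s) URL: {url!r}")
        lines.append(url)
    # Whole-domain entries: 'domain:' followed directly by the domain, no space.
    for domain in sorted({d.strip().lower() for d in domains if d.strip()}):
        lines.append(f"domain:{domain}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = build_disavow_file(
        urls=["http://spam.example.com/stuff/comments.html"],
        domains=["shadyseo.example.net"],
        note="Owners contacted; these links could not be removed",
    )
    # Print the result; save it as a .txt file before uploading it.
    print(text, end="")
```

Sorting and de-duplicating is just a convenience so the file stays readable as you add entries over time; the validation step catches entries that are not full URLs before you upload them.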

If you need help finding bad links pointing to your site, the "Links to Your Site" feature in Webmaster Tools is a good place to start your search.

Google's announcement of the tool on the Webmaster Central Blog answers a few common questions, and notes that most sites will not need to use the feature at all unless they've received a notification.