Google is always making changes and updates, but the past couple of weeks have been especially busy for the biggest search engine out there. There have been tons of changes both big and small, but best of all, they all seem to be part of one comprehensive plan with a long-term strategy.

Eric Enge sums up all the changes when he says Google is pushing people away from a tactical SEO mindset to a more strategic and valuable approach. To understand exactly what that means going forward, it is best to review the biggest changes. By seeing what has been revamped, it is easier to make sense of what the future looks like for Google.

1. ‘(Not Provided)’

One of the biggest changes for both searchers and marketers is Google’s move to make all organic searches secure starting in late September. For users, this means more privacy when browsing, but for marketers and website owners it means we are no longer able to see keyword data from most users coming to sites from Google searches.

This means marketers and site owners have to deal with a lot less information, or work much harder to get it. There are ways to find keyword data, but it’s no longer easily accessible from any Google tool.

This was one of the bigger hits for technical SEO, though there are many workarounds for those looking for them.

2. No PageRank Updates

PageRank has long been a popular tool for many optimizers, but it has also been commonly used by actual searchers to get a general idea of the quality of the sites they visit. However, Google’s Matt Cutts has openly said not to expect another update to the tool this year, and it seems it won’t be available much longer on any platform. The toolbar has never been available on Chrome, and with Internet Explorer revamping how toolbars work on the browser, it seems PageRank is going to be left without a home.

This is almost good news in many ways. PageRank has always been considered a crude measurement tool, so if the tool goes away, many will have to turn to more accurate measurements.

3. Hummingbird

Google’s Hummingbird algorithm seemed minor to most people using the search engine, but it was actually a major overhaul under the hood. Google vastly improved its ability to understand conversational search, which entirely changes how people can search.

The most notable difference with Hummingbird is Google’s ability to contextualize searches. If you search for a popular sporting arena, Google will find you all the information you previously would have expected, but if you then search “who plays there”, you will get results that are contextualized based on your last search. Most won’t find themselves typing these kinds of searches, but for those using their phones and voice capabilities, the search engine just got a lot better.

For marketers, the consequences are a bit heavier. Hummingbird greatly changes the keyword game and has huge implications for the future. With the rise of conversational search, we will see that exact keyword matches become less relevant over time. We probably won’t feel the biggest effects for at least a year, but this is definitely the seed of something huge.

4. Authorship

Authorship isn’t exactly new, but it has become much more important over the past year. As Google is able to recognize the creators of content, it can begin measuring which authors consistently get strong responses such as likes, comments, and shares. This means Google will be more and more able to identify those who are creating the most valuable content and rank them highest, while those consistently pushing out worthless content will see their clout drop the longer they fail to actually contribute.

5. In-Depth Articles

Most users are looking for quick answers to their questions and needs with their searches, but Google estimates that “up to 10% of users’ daily information needs involve learning about a broad topic.” To reflect that, they announced a change to search in early August that surfaces results from more comprehensive sources for searches that might require more in-depth information.

What do these all have in common?

These changes may all seem separate and unique, but there is an undeniably huge level of interplay between how all these updates function. Apart, they are all moderate to minor updates. Together, they are a huge change to search as we know it.

We’ve already seen how link building and over-attention to keywords can hurt your optimization when improperly managed, but Google seems keen on devaluing these search factors even more moving forward. Instead, they are opting for signals which offer the most value to searchers. Their search has become more contextual so users can find their answers more easily, no matter how they search. But the more conversational search becomes, the less rankings are about keywords.

In the future, expect Google to place more and more emphasis on authorship and the value that these publishers are offering to real people. Optimizers will always focus on pleasing Google first and foremost, but Google is trying to synergize these efforts so that your optimization efforts are improving the experience of users as well.

A couple of weeks ago, Google released an update directly aimed at the “industry” of websites which host mugshots, which many aptly called The Mugshot Algorithm. It was one of the more specific updates to search in recent history, but was basically meant to target sites aiming to extort money out of those who had committed a crime. Google purposefully targeted those sites that were ranking well for names and displayed arrest photos, names, and details.

There have never been more opportunities for local businesses online than now. Search engines cater more and more to local markets as shoppers make more searches from smartphones to inform their purchases. But, in the more competitive markets that also means local marketing has become quite complicated.

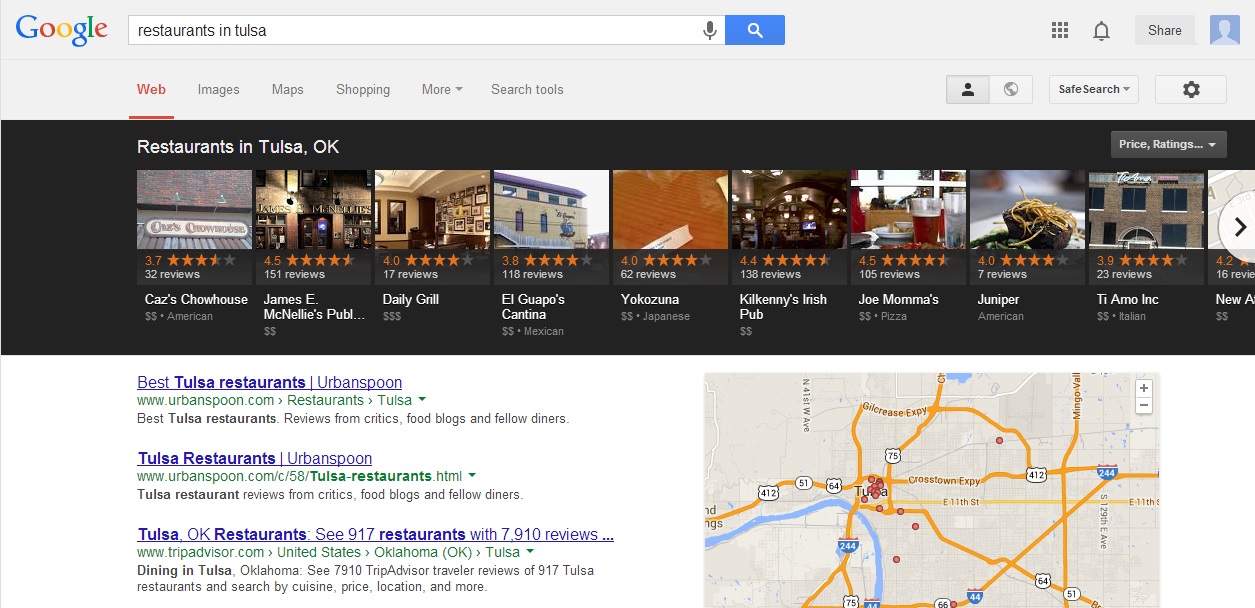

Google’s Carousel may seem new to most searchers, but it has actually been rolling out since June. That means enough time has passed for marketing and search analysts to really start digging in to see what makes the carousel tick.

Many considered it only a matter of time before advertising would find its way onto Instagram, since Facebook purchased the app. However, it took much longer than most expected. Instagram has remained ad-less until now, but over the next few months you will finally see that change. Instagram

Google AdWords announced yesterday that a major reporting update to conversion tracking, called Estimated Total Conversions, will be rolling out over the next few weeks. The new feature provides estimates of conversions which take place over multiple devices and adds this to the conversion reporting we are already accustomed to.

Last week SEO and online marketing professionals
