A representative from Google announced the search engine began rolling out a broad core update (appropriately titled the June 2021 Core Update) this week. Surprisingly, the announcement also revealed a second update is expected to roll out next month.

Note that this is not the Page Experience Update, which Google is planning to launch in mid-June.

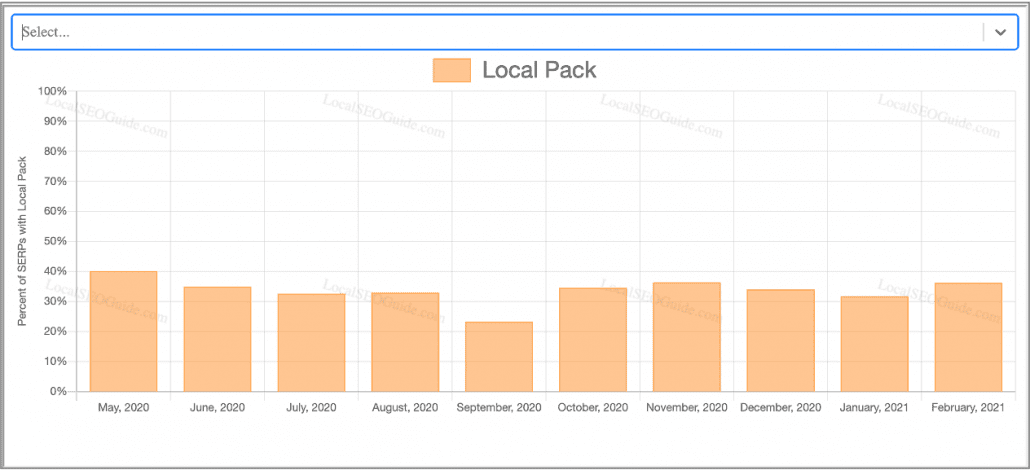

Typically, Google rolls out a broad core update every few months. For example, the last update before this came nearly six months ago, in December 2020. The gap between updates before that was even longer, with the previous update arriving in May 2020.

Naturally, this raises some questions about why the company felt the need to start releasing a two-part algorithm update now, rather than waiting to roll it all out at once next month.

Google being Google, details about what the broad core updates will change are relatively scant. Still, here’s what we do know:

Why Two Core Updates?

Based on statements from Google liaison Danny Sullivan and others, it seems the search engine simply didn’t want to sit on some of the completed updates while it waited for the rest to be finalized.

Sullivan did note, however, that some effects from the first part of the update may be temporary and could reverse once the second part rolls out.

“Of course, any core update can produce drops or gains for some content. Because of the two-part nature of this release, it’s possible a very small slice of content might see changes in June that reverse in July.”

What You Should Expect

As with most broad core updates, Google is giving somewhat mixed signals about how big the impact will be.

On one hand, the company says most sites won’t notice any changes to their presence in search results. At the same time, Google says the update will produce “some widely noticeable effects.”

From past experience, we can predict that sites producing quality content and keeping up with overall Google guidelines will be largely unaffected. Those within more controversial or less reputable industries (online gambling, some medical niches, law, etc.) may be more likely to see some fallout even if they have been doing everything “right”.

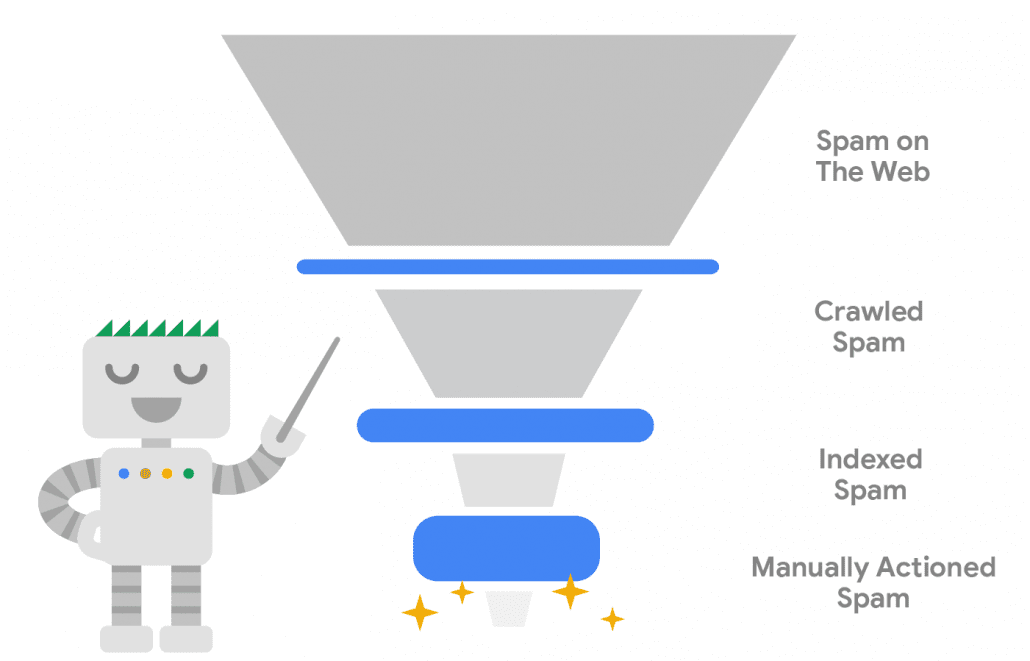

Those using tactics that can be seen as more “spammy”, such as republishing content, using user-generated content in overbearing or spammy ways, or using questionable guest-blogging practices, may also see some negative results as the update rolls out.

Ultimately, we will all have to wait and see until the rollout finishes, which Google says should take about two weeks.

What To Do If You Are Affected

Perhaps one of the most frustrating things about broad core updates is that you can be impacted even if you aren’t doing anything ostensibly “wrong”. Some pages may see negative ranking shifts despite following all of Google’s guidance.

This makes recovering a tricky proposition, but Google has provided some advice for brands negatively impacted.

Specifically, the company suggests asking yourself the following questions about your site and its content:

Content and Quality Questions

- Does the content provide original information, reporting, research or analysis?

- Does the content provide a substantial, complete or comprehensive description of the topic?

- Does the content provide insightful analysis or interesting information that is beyond obvious?

- If the content draws on other sources, does it avoid simply copying or rewriting those sources and instead provide substantial additional value and originality?

- Does the headline and/or page title provide a descriptive, helpful summary of the content?

- Does the headline and/or page title avoid being exaggerating or shocking in nature?

- Is this the sort of page you’d want to bookmark, share with a friend, or recommend?

- Would you expect to see this content in or referenced by a printed magazine, encyclopedia or book?

Expertise Questions

- Does the content present information in a way that makes you want to trust it, such as clear sourcing, evidence of the expertise involved, background about the author or the site that publishes it, such as through links to an author page or a site’s About page?

- If you researched the site producing the content, would you come away with an impression that it is well-trusted or widely-recognized as an authority on its topic?

- Is this content written by an expert or enthusiast who demonstrably knows the topic well?

- Is the content free from easily-verified factual errors?

- Would you feel comfortable trusting this content for issues relating to your money or your life?

Presentation and Production Questions

- Is the content free from spelling or stylistic issues?

- Was the content produced well, or does it appear sloppy or hastily produced?

- Is the content mass-produced by or outsourced to a large number of creators, or spread across a large network of sites, so that individual pages or sites don’t get as much attention or care?

- Does the content have an excessive amount of ads that distract from or interfere with the main content?

- Does content display well for mobile devices when viewed on them?

Comparative Questions

- Does the content provide substantial value when compared to other pages in search results?

- Does the content seem to be serving the genuine interests of visitors to the site or does it seem to exist solely by someone attempting to guess what might rank well in search engines?

While not hard-and-fast guidance, these questions can help you evaluate your site and find areas to improve before the next broad core update.

Thankfully, in this case we know the next update is coming quite soon – July 2021 – so there is a chance any negative effects from the ongoing update will be short-lived.