After months of rumors and speculation, Google’s AI-powered generative search experience is here – sort of.

The new conversational search tool is available as a Google Labs experiment, accessible only to users who sign up for a waitlist. That means it is not replacing the current version of Google Search (at least, not yet), but it is the first public look at what is likely to be the biggest overhaul to Google Search in decades.

Though we at TMO have not yet been able to try the new search experience ourselves, we have gathered the most important details from those who have, to show you what to expect when the generative search experience becomes more widely available.

What The AI-Powered Google Generative Search Experience Looks Like

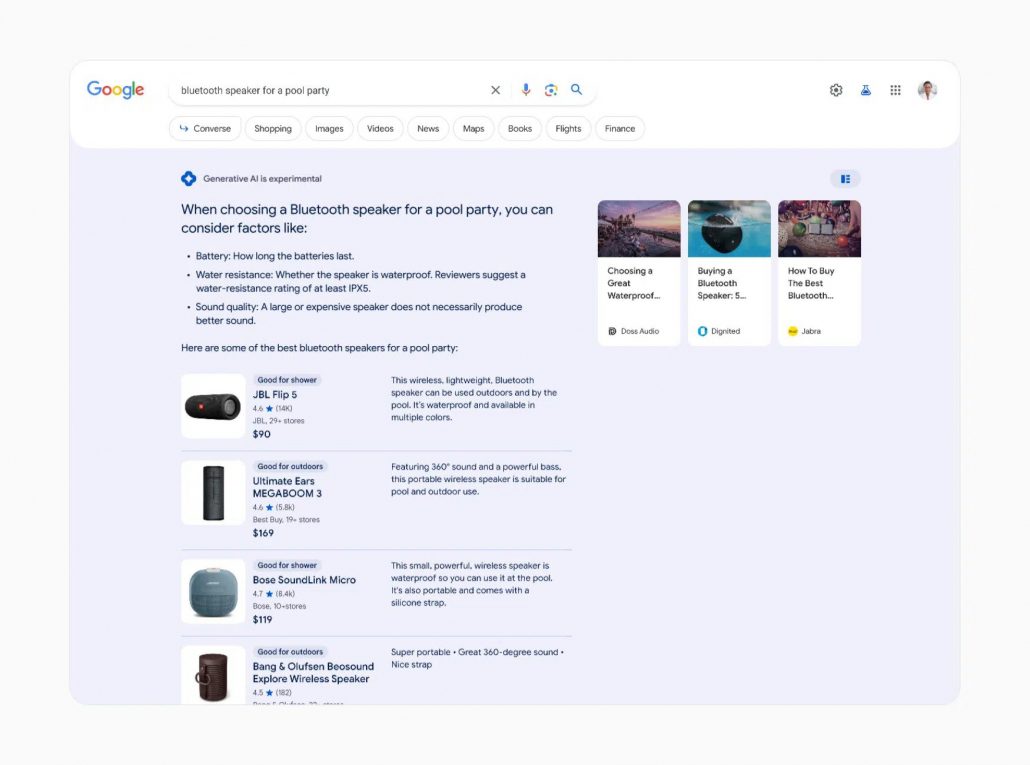

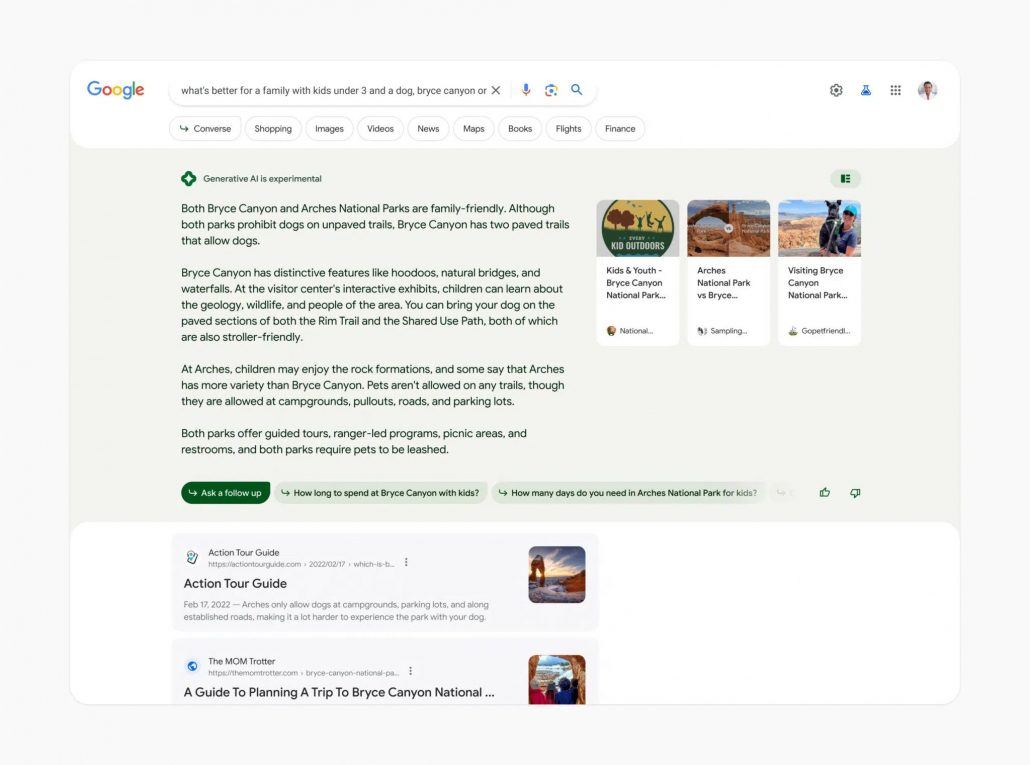

The new Google search experience appears at the very top of Google search results, giving context, answering basic questions, and providing a conversational way to refine your search for better results.

Notably, any AI-generated search information is currently tagged with a label that reads “Generative AI is experimental.”

Google will also subtly shade AI content depending on the search to “reflect specific journey types and the query intent itself.” For example, the AI-created results in the shopping-related search below are placed on a light blue background.

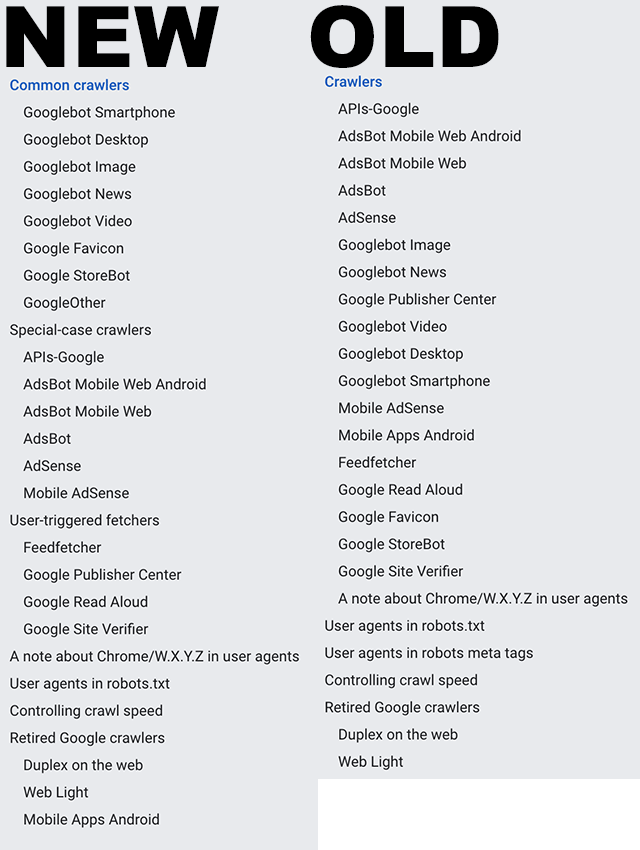

Where Does The Information Come From?

Unlike most current AI-powered tools, Google’s new search experience cites its sources.

Sources are mentioned and linked to, making it easier for users to keep digging.

Additionally, the AI tools can pull from Google’s existing search tools and data, such as Google Shopping product listings.

Conversational Search

The biggest change that comes with the new AI-powered search is the ability to ask follow-up questions that use context from your previous search. As the announcement explains:

“Context will be carried over from question to question, to help you more naturally continue your exploration. You’ll also find helpful jumping-off points to web content and a range of perspectives that you can dig into.”

What AI Won’t Answer

The AI-powered tool will not provide information on a range of topics that might be sensitive or where accuracy is particularly important. For example, Google’s AI tools won’t answer questions about giving medicine to a child because of the potential risks involved. Similarly, reports suggest the tool won’t answer questions about financial issues.

Additionally, Google’s AI-powered search will not discuss or provide information on topics that may be “potentially harmful, hateful, or explicit”.

To try out the new Google AI-powered generative search experience for yourself, sign up for the Google Labs waitlist.