For years, backlinks have been considered one of the most important factors for ranking on Google’s search engine. In 2016, the company even confirmed as much when a search quality senior strategist said that the top ranking factors were links, content, and RankBrain.

According to new comments from Google’s Gary Illyes, an analyst for Google Search, things have changed since then.

What Was Said

During a panel at Pubcon Pro, Illyes was asked directly whether links are still one of the top three ranking factors. In response, here is what he said:

“I think they are important, but I think people overestimate the importance of links. I don’t agree it’s in the top three. It hasn’t been for some time.”

Illyes even went as far as to say there are cases where sites have absolutely zero links (internal or external) but consistently rank in the top spot because they provide excellent content.

The Lead Up

Gary Illyes isn’t the first person from Google to suggest that links have lost the SEO weight they used to carry. Last year, Duy Nguyen from the search quality team stated that links had lost their impact during a Google SEO Office Hours session:

“First, backlinks as a signal has a lot less significant impact compared to when Google Search first started out many years ago. We have robust ranking signals, hundreds of them, to make sure that we are able to rank the most relevant and useful results for all queries.”

Other major figures at Google, including Matt Cutts and John Mueller, have predicted for years that this would happen. As far back as 2014, Cutts (a leading figure at Google at the time) said:

“I think backlinks still have many, many years left in them. But inevitably, what we’re trying to do is figure out how an expert user would say, this particular page matched their information needs. And sometimes backlinks matter for that. It’s helpful to find out what the reputation of the site or a page is. But, for the most part, people care about the quality of the content on that particular page. So I think over time, backlinks will become a little less important.”

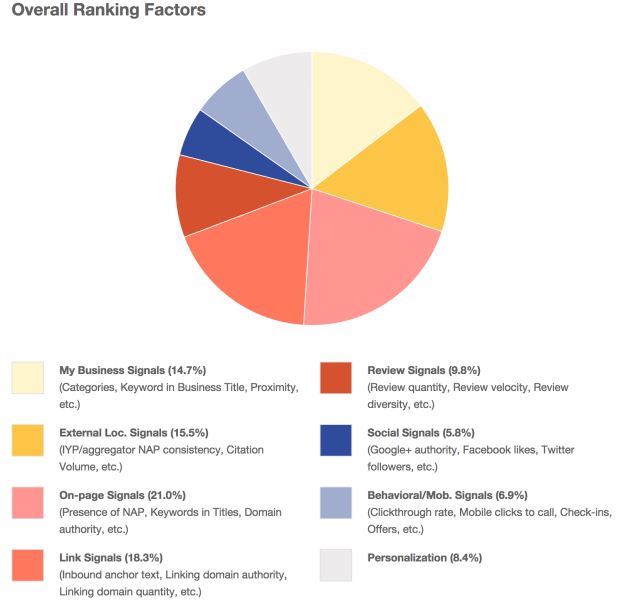

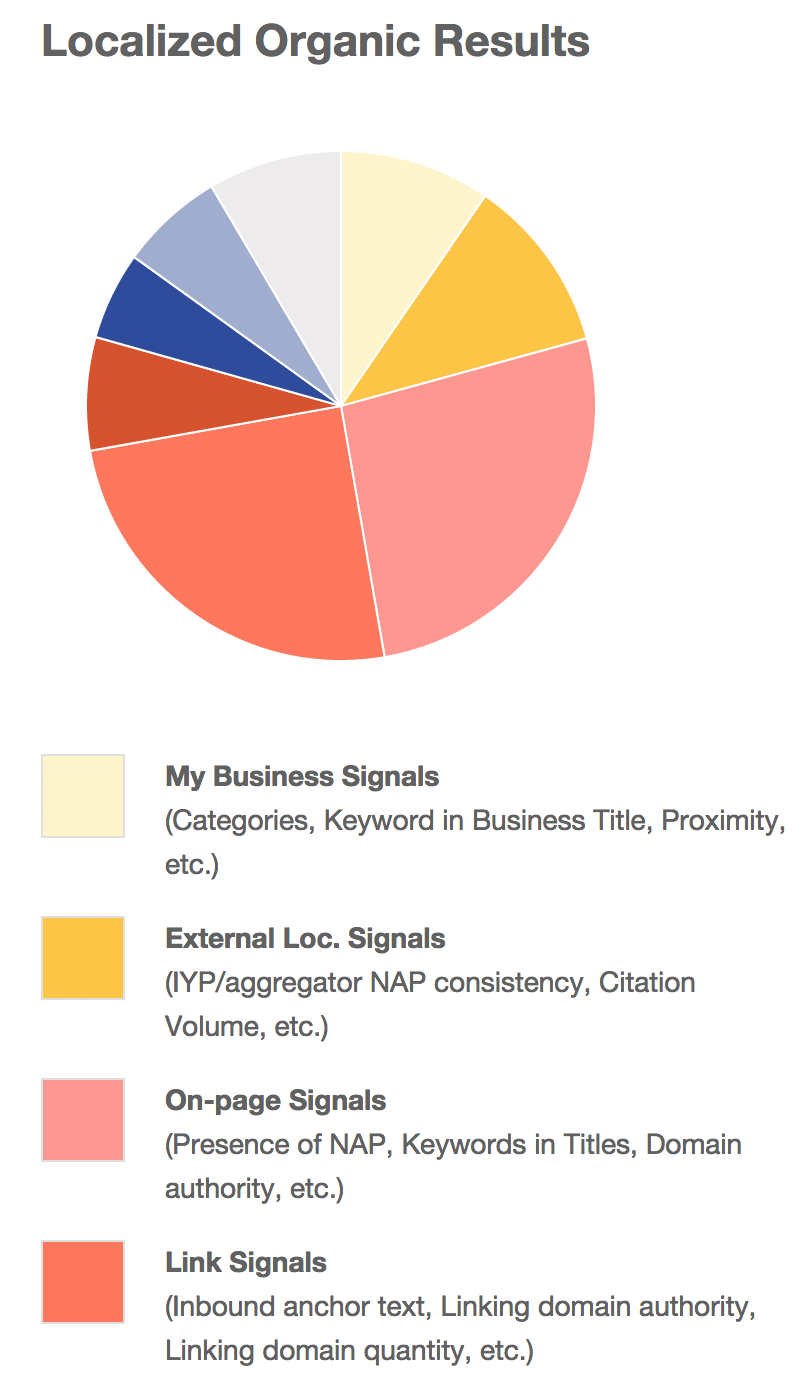

Ultimately, this shift was bound to happen because search has become so much more complex. With each search, Google considers the intent behind the search, the actual query, and personal information to help tailor the search results for each user. With so much in flux, we have reached a point where the most important ranking signals may even differ based on the specific site that is trying to rank.