Internet security and privacy have been at the forefront of many people’s minds since the recent headlines about the NSA keeping data on the public’s online activity, and the issue has had subtle effects on search engines. We’ve seen a small group of searchers migrating to search engines with stricter privacy policies. Of course, those who are truly outraged by the NSA news would expect to see a pretty large shift, but so far the change has been slow. Still, it is picking up momentum.

More and more people are learning how Google actually decides which results to show you as an individual, and many are a little concerned. While Google sees the decision to collect data on users as an attempt to tailor results individually, a few raise their eyebrows at the idea that a search engine and huge corporation is keeping fairly detailed tabs on the internet activities of its users. The internet comes with an assumption that our activity is at least fairly private, though that notion is being chipped away daily. There is still the widespread assumption that our e-mails and simple search habits are our business alone, and that assumption is also being proved wrong.

These privacy issues have a fair number of people looking for search engines that keep searches completely anonymous and don’t run data collection processes. The most notable alternative people seem to be moving to is DuckDuckGo.com, a search engine whose privacy policy states that it will not retain any personal information or share that information with other sites. The search engine has seen traffic rise by close to 2 million searches per day since the NSA scandal broke.

There are numerous debates surrounding these issues. Political discourse focuses on the legal and ethical aspects of the government and large corporations working together to collect information on every citizen of the United States (other companies included in the NSA story include Yahoo, Facebook, and Microsoft). But for us as SEO professionals, the bigger question is the ethical and practical reality of individually tailored results, which rely entirely on data collection.

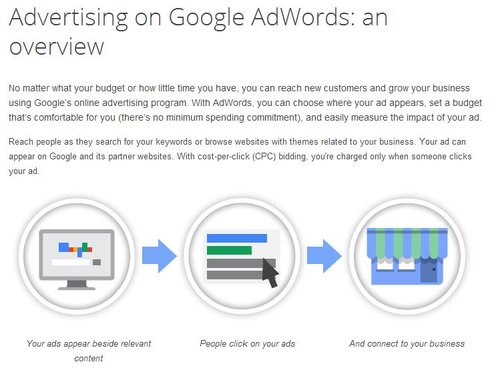

If you’ve ever taken a look at the ads on the edges of websites, you’ve probably noticed that they are loosely based on your personal information. The ads reflect your gender, age, location, and sometimes a loose search history. They are chosen based on information your computer relays to almost every site you access. Google acts the same way, but it collects this data and combines it with extended data from your search history to deliver search results it believes are more relevant to you.

There is a practicality to this. We all have finely tuned personal tastes, and we innately want search engines to show us exactly what we want with the first search result, every time. While poll responses say that the majority of people don’t want personalized search results, our online actions betray our true desire for efficient search. The best way to deliver that efficiency is to gather data and use it to fine-tune results. On a broad scale, we don’t want results for a grocery store in Los Angeles when we are physically situated in Oklahoma. On a smaller scale, we don’t want Google showing us sites we never go to when our favorite resource for a topic is a few results down the page.

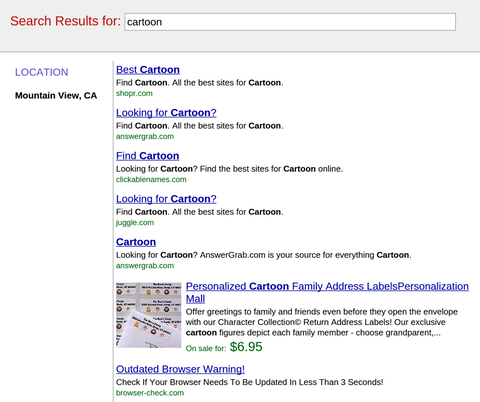

In this respect, the move towards search engines like DuckDuckGo is actually a step back. These privacy-focused search engines are essentially acting the way Google used to. They use no personal information, and simply try to show the best results for a specific search. Searchers give up some functionality in exchange for privacy, and this could explain the slow migration to these types of search engines. But people are moving.

The longer the NSA story stays in the news, the more searches DuckDuckGo receives, and this could have a significant effect on the search market in the future. The question is, do we want to sacrifice personal privacy and assumed online anonymity for searches that match our lives? Andrew Lazaunikas recently wrote an article on the debate for Search Engine Journal. He admits DuckDuckGo delivers excellent, unbiased results, but in the end, “when I want to know the best pizza place or car dealer in my area, the local results that Google and Bing shows are superior.”

Lazaunikas isn’t deterred by this drawback, and notes, “I can still get the information I need from DuckDuckGo by modifying my search.” He ends his article by vowing to use DuckDuckGo more in the future, but the question is whether the public at large will follow. For the moment, it seems as though most people prefer quick, easy searches and familiarity to trying out these new search engines.