To understand and rank websites in search results, Google is constantly using tools called crawlers to find and analyze new or recently updated web pages. What may surprise you is that the search engine actually uses three different types of crawlers depending on the situation with web pages. In fact, some of these crawlers may ignore the rules used to control how these crawlers interact with your site.

In the past week, those in the SEO world were surprised by the reveal that the search engine had begun using a new crawler called the GoogleOther crawler to relieve the strain on its main crawlers. Amidst this, I noticed some asking “Google has three different crawlers? I thought it was just Googlebot (the most well-known crawler which has been used by the search engine for over a decade).”

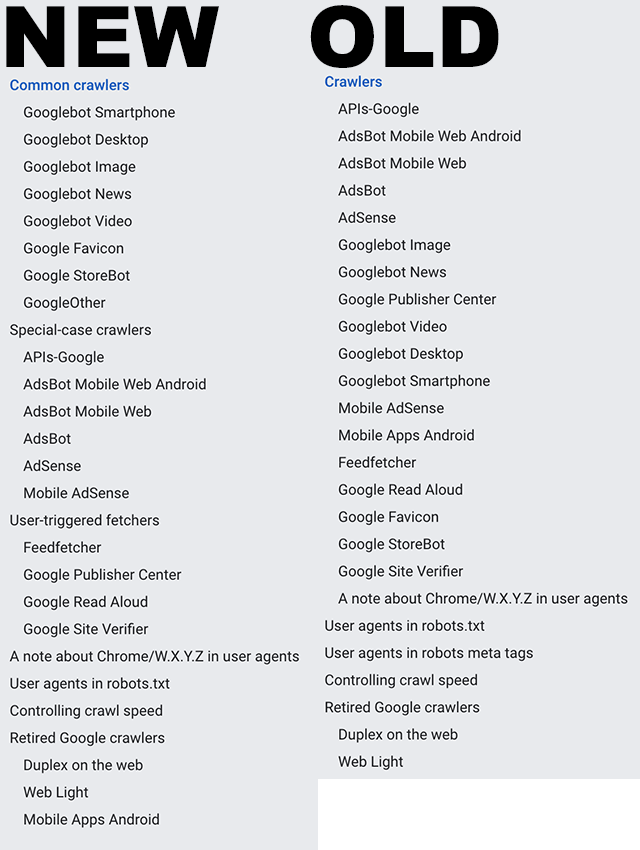

In reality, the company uses quite a few more than just one crawler and it would take a while to go into exactly what each one does as you can see from the list of them (from Search Engine Roundtable) below:

However, Google recently updated a help document called “Verifying Googlebot and other Google crawlers” that breaks all these crawlers into three specific groups.

The Three Types of Google Web Crawlers

Googlebot: The first type of crawler is easily the most well-known and recognized. Googlebots are the tools used to index pages for the company’s main search results. This always observes the rules set out in robots.txt files.

Special-case Crawlers: In some cases, Google will create crawlers for very specific functions, such as AdsBot which assesses web page quality for those running ads on the platform. Depending on the situation, this may include ignoring the rules dictated in a robots.txt file.

User-triggered Fetchers: When a user does something that requires for the search engine to then verify information (when the Google Site Verifier is triggered by the site owner, for example), Google will use special robots dedicated to these tasks. Because this is initiated by the user to complete a specific process, these crawlers ignore robots.txt rules entirely.

Why This Matters

Understanding how Google analyzes and processes the web can allow you to optimize your site for the best performance better. Additionally, it is important to identify the crawlers used by Google and ensure they are blocked in analytics tools or they can appear as false visits or impressions.

For more, read the full help article here.