Google is rolling out several updates and features for Google Search aimed at making it easier for users to find the content they are looking for.

Among the announcements, the search engine revealed new ways to use augmented reality (AR) and Google Lens to shop for products and to find information, such as which nearby restaurant serves a dish you’ve been craving.

Below, we go over the announcements one by one, covering the details and when you can start using these tools to help users find your products and services.

Introducing Multisearch For Food

Google is working to unite its search tools, including Google Lens and Maps, so that users can seamlessly combine different types of searches into a single query.

For example, using multisearch, you can now take a picture of a meal in Google Lens and add a text modifier such as “near me” to discover what restaurants serve that meal.

“This new way of searching will help me find local businesses in my community, so I can more easily support neighborhood shops during the holidays,” said Cindy Huynh, Product Manager of Google Lens.

This feature is rolling out today to all English-language users in the U.S.

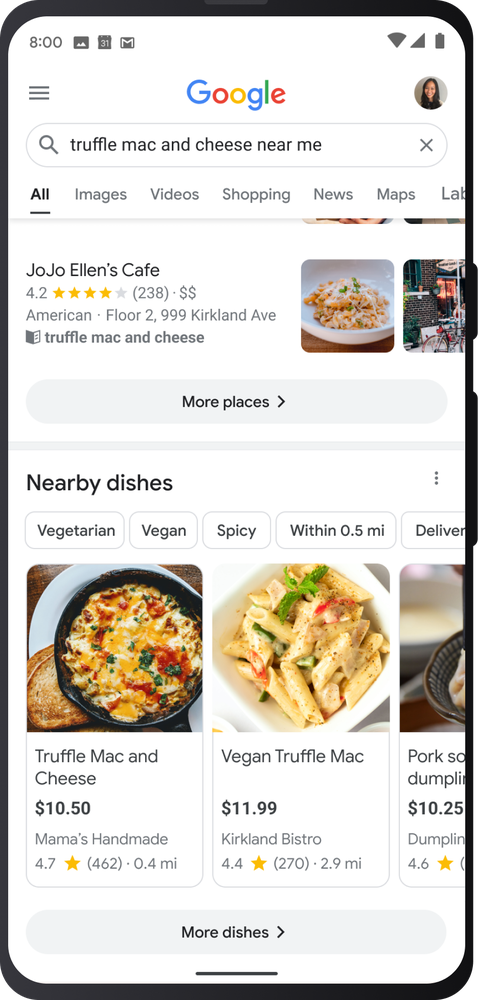

Search For Specific Dishes

Along with the announcement above, Google revealed that users can also search for specific dishes by name to find information such as which restaurants serve them, pricing, ingredients, and more.

As Google’s Sophia Lin says:

“I often crave comfort food this time of year — like truffle mac and cheese — but I don’t always know where to find it. Lucky for foodies, starting today, you can now search for the exact dish you’re craving and see all the places near you that serve it.”

AR Shopping Tools For Shoes And Makeup

It has always been difficult for shoppers to picture how a product will look outside the store, but Google is using augmented reality to let them visualize potential purchases before they buy.

The first way Google is doing this is by letting you see potential shoe purchases in your own living space.

Starting today, shoppers can view high-quality 3D models of shoes, spin them around, zoom in on details, and even see how the shoes would look in their own surroundings.

Importantly, this is available for any brand with 3D assets of its shoes or home goods.

Additionally, Google has upgraded its AR shopping tools for makeup to include a broader range of skin tones and models with a more diverse set of features.

The search engine has added over 150 new models representing a diverse spectrum of skin tones, ages, genders, face shapes, and ethnicities to test cosmetics on.